Anthropic

About Anthropic

Anthropic is an artificial intelligence (AI) safety and research company focused on developing reliable, interpretable, and steerable AI systems. The firm conducts foundational research in AI alignment and builds large-scale AI models designed to be safer by construction, with a particular emphasis on robust behavior and reduced risk of harmful outputs. Anthropic’s work spans deep technical research, model development, and practical tools that address key challenges in deploying AI responsibly.

Overview

Anthropic’s mission centers on advancing AI capabilities while prioritizing safety, transparency, and alignment with human values. The company designs AI models that are intended to be more predictable, controllable, and understandable than traditional large language models (LLMs). Anthropic’s research and products aim to support developers, enterprises, and institutions in adopting powerful AI tools with mitigations for ethical and safety risks.

History and Background

Anthropic was founded in 2021 by former researchers and engineers from leading AI organizations, including OpenAI. The founding team brought deep expertise in machine learning, AI safety, and large-scale model engineering, motivated by concerns about existential and societal risks posed by increasingly capable AI systems. Since its inception, Anthropic has focused on both theoretical research and practical implementations of its safety-centric approaches.

The company gained attention in the AI community with the release of Claude, its family of large language models designed to exhibit more controlled and interpretable behavior. Anthropic’s research publications and open dialogues with policymakers, academics, and industry stakeholders have contributed to broader discussions on AI governance and responsible innovation.

Core Products and Services

- Claude AI Models: A suite of large language models engineered for safety and alignment, offering capabilities for natural language understanding, generation, and reasoning while reducing harmful or biased outputs.

- Developer APIs: Access to Anthropic’s models through API services that enable integration into enterprise applications, automation workflows, and customer-facing tools.

- AI Safety Research: Ongoing work in interpretability, robustness, and alignment methodologies to inform best practices for deploying AI systems responsibly.

- Collaboration and Policy Engagement: Partnerships with academic institutions, industry consortia, and policymakers to shape AI safety standards and ethical guidelines.

Technology and Features

- Architectures designed for steerability, allowing users to influence and constrain model behavior in predictable ways.

- Techniques for improved interpretability, helping developers and researchers understand internal model decision-making processes.

- Safety training paradigms aimed at minimizing harmful, unsafe, or biased outputs.

- Scalable infrastructure to support enterprise usage and real-time AI applications.

Use Cases and Market Position

Anthropic’s models and tools support a range of applications across industries:

- Enterprise AI Integration: Embedding Claude models into customer service, knowledge retrieval, and productivity tools.

- Education and Research: Assisting institutions with research support, content synthesis, and analysis of complex datasets.

- Automation and Workflow Optimization: Enabling business process automation while maintaining safety constraints in generated outputs.

- AI Safety Platforms: Serving as a resource for organizations seeking models with explicit alignment mechanisms and interpretability features.

Funding and Team

Anthropic has raised substantial capital from prominent investors interested in AI safety and long-term innovation. Backers include venture capital firms and technology companies supportive of the company’s mission to balance cutting-edge capability with risk mitigation. The leadership team comprises experts in machine learning research, safety, and product engineering, with a track record in both academic and industrial AI development.

Risks and Considerations

Anthropic’s focus on safety and alignment reflects broader concerns in the AI industry about the societal impacts of powerful models. Key considerations include the challenges of balancing capability and risk mitigation, competition from other AI developers, and evolving regulatory environments surrounding AI deployment. Organizations adopting Anthropic’s technologies should assess implementation risks, ethical considerations, and governance frameworks tailored to their use cases.

Anthropic News

- How Anthropic stopped AI agents working for Chinese state-sponsored spy campaign

Anthropic foiled a Chinese state-backed cyber-espionage attack powered by its own AI agents, with "significant implications" for cybersecurity.

- Anthropic CEO calls for AI transparency, argues against Trump bill’s decade-long state regulatory freeze

Anthropic CEO Dario Amodei advocated federal AI transparency oversight and argued against the Trump bill's proposed decade-long freeze on state AI regulation.

- Amazon completes $4 billion deal with Anthropic, deepening strategic AI partnership

Amazon has invested another $2.75 billion in Anthropic, following an earlier $1.25 billion injection in 2023.

Anthropic Team

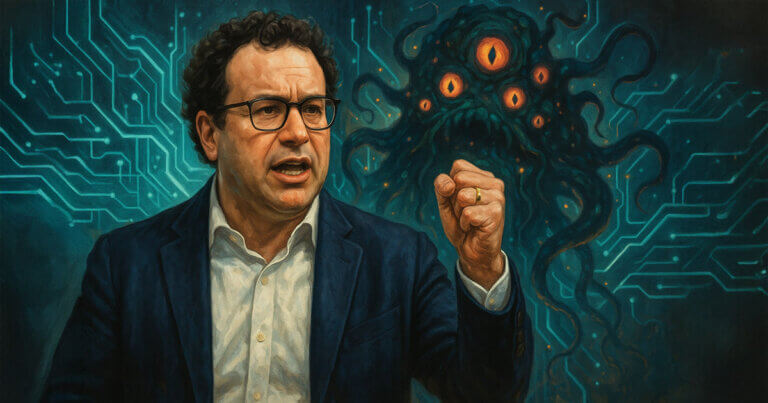

- Dario Amodei: CEO and Co-founder

- Daniela Amodei: President and Co-founder

- Jack Clark: Co-founder

- Jared Kaplan: Co-founder

Anthropic Support

All images, branding, and wording are copyright of Anthropic. All content on this page is used for informational purposes only. CryptoSlate has no affiliation or relationship with the company mentioned on this page.